On Testing

It takes an extreme amount of effort to prove that your code does what you say it does. The next best thing is to run a battery of tests against it and show that it works as expected in each of those situations.

Why Test?

- Testing takes time. Time that is hard to justify to project managers.

- Why would you need to write tests, if after you've finished debugging you know it works?

- What is the reason for QA, if you are testing it yourself?

- It's such a bore! Why do it?

Because it is our responsibility.

The software we write has real consequences. It runs electric vehicles, medical equipment, and plenty of other safety-critical systems.

Software development is one of the least regulated industries. There is little government oversight and no licensing body to ensure we are doing things right, yet we have some of the widest reach of any profession. Many other professions take oaths or sit licensing exams. We don't, and we love it. We are essentially self-taught, with no exam we need to pass.

It's odd. By some estimates, the number of programmers doubles every 5 years. That is a lot of new people in the industry.

It is our responsibility to learn, and share what we think is right. To talk about it. It is our responsibility to test.

OK, I get it, we can hurt people, but my use case isn't THAT dangerous. So again, why test?

Because it's rude not to.

Let's say you work at a company, write code, then leave, and another developer comes along and uses your code or needs to make a small change.

That person can either run the test suite you created to see whether everything still works as expected after their change, or they have to understand how each component works and manually make sure there aren't any side effects.

Because it's actually faster to write tests.

If you want to deploy new features often and ensure the quality of your code remains intact, a test suite is the only viable approach. Manual tests take a lot of time and aren't efficient. If you send your code to QA and it has errors, you will look bad, it will take more time as you go back and forth, and you lose control over whether you hit your deadline.

Because it increases code reusability.

If your functions/classes are tested, then you are more likely to reuse them and to have others use them. Any open source package has this in mind because it's a necessity: there is no in-person communication, so your code needs to speak for itself.

Alright, I hear you. It seems like this "Testing" thing could be worth it, so how do I do it? The scope of it seems endless, do I just test everything?

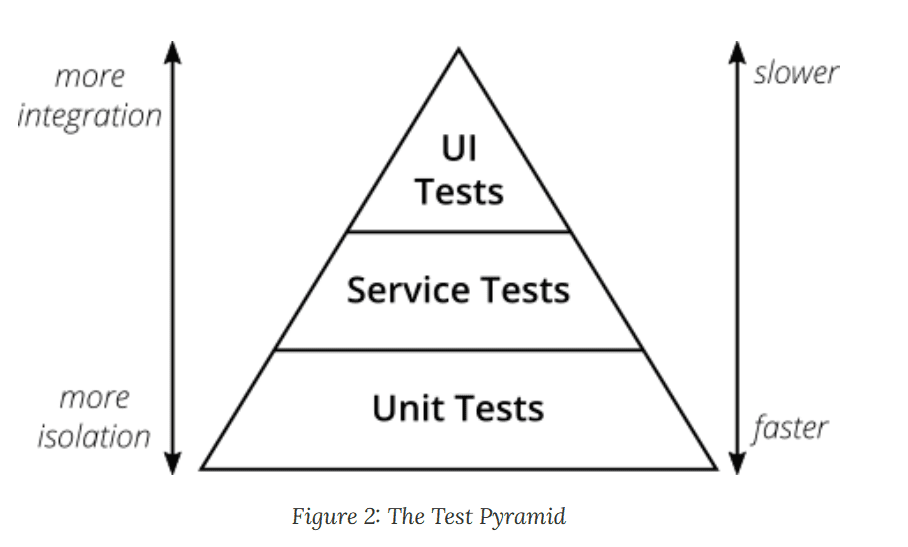

Practical Test Pyramid

The "Test Pyramid" comes from Mike Cohn. It is a visual metaphor that tells you how much testing to do at each layer.

Testing approaches change, but these two principles will apply:

- Write tests with different granularity

- The more high-level you get, the fewer tests you should have.

Essentially this translates to writing

- many small unit tests,

- a decent amount of integration/functional tests, and

- very few E2E tests.

A well-rounded test portfolio should look to be responsive, reliable and maintainable.

Have a goal of Continuous Delivery:

Automatically ensure that your software can be released into production at any time. To get there, use a build pipeline that automatically tests your software and deploys it to test and production environments.

Unit Tests: What are Units?

Units for functional programming are functions. For OOP, it can range from a single method to a class.

Pretty simple. Now let's go further. There are two types of unit tests.

Sociable and Solitary Unit tests:

- Sociable: Allow tests to talk to real collaborators (real databases, endpoints, or classes).

- Solitary: Stub all collaborators and isolate the functionality that's being tested, to avoid side effects.

You want to keep your test suite light and fast, so you can run it over and over again while making changes to ensure you aren't going backwards. With this in mind, real external dependencies provide more confidence that things are working, but at the expense of consistency and time.
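To make the distinction concrete, here is a minimal sketch of the same unit tested both ways. `PriceCalculator` and `TaxTable` are hypothetical names invented for this illustration, and the stub is built with Python's standard `unittest.mock`.

```python
from unittest.mock import Mock


class TaxTable:
    """Real collaborator (hypothetical): looks up a tax rate for a region."""

    def rate_for(self, region: str) -> float:
        return {"CA": 0.25, "NY": 0.5}.get(region, 0.0)


class PriceCalculator:
    """Unit under test (hypothetical): depends on a tax table collaborator."""

    def __init__(self, tax_table):
        self.tax_table = tax_table

    def total(self, net: float, region: str) -> float:
        return net * (1 + self.tax_table.rate_for(region))


def test_sociable():
    # Sociable: the real TaxTable takes part in the test.
    calc = PriceCalculator(TaxTable())
    assert calc.total(100.0, "NY") == 150.0


def test_solitary():
    # Solitary: the collaborator is replaced by a stub with a canned answer,
    # so only PriceCalculator's own logic is exercised.
    stub_table = Mock()
    stub_table.rate_for.return_value = 0.5
    calc = PriceCalculator(stub_table)
    assert calc.total(100.0, "anywhere") == 150.0
```

The sociable test would also catch a bug in `TaxTable`, while the solitary one keeps passing no matter what the real collaborator does. Which trade-off you want depends on how slow or flaky the real collaborator is.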

Test Doubles: Mocking and Stubbing

Replace a real class/module/function with a fake version.

- The fake version answers to the same method calls and can be used just like the real one, but it answers with canned responses that you define yourself.

It's like assuming the collaborator will work, so you can test specific functionality. Mocked/stubbed things should therefore usually succeed, unless the test is specifically about how your code handles a failure.

Mocks are like stubs in that you pre-program them with canned answers. The difference is that a mock also carries expectations about how it will be called, and the test fails unless all of those expectations are met. A stub makes no such check.

Mocks and stubs keep unit tests light and fast, and allow you to run thousands of tests within a few minutes.

Stub out external collaborators, set up some input data, call your subject under test and check that the returned value is what you expected.
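A rough sketch of the stub/mock difference, using Python's standard `unittest.mock`. The function `notify_if_overdue` and its two collaborators are made up for this example.

```python
from unittest.mock import Mock


def notify_if_overdue(invoice_repo, mailer, customer_id):
    """Unit under test (hypothetical): emails a customer with overdue invoices."""
    overdue = invoice_repo.find_overdue(customer_id)
    if overdue:
        mailer.send(customer_id, f"You have {len(overdue)} overdue invoice(s)")
    return len(overdue)


def test_with_stub():
    # Stub: only supplies a canned answer; we check the returned value.
    repo = Mock()
    repo.find_overdue.return_value = ["INV-1", "INV-2"]
    assert notify_if_overdue(repo, Mock(), customer_id=7) == 2


def test_with_mock():
    # Mock: we additionally verify that the expected call was made.
    repo = Mock()
    repo.find_overdue.return_value = ["INV-1"]
    mailer = Mock()
    notify_if_overdue(repo, mailer, customer_id=7)
    mailer.send.assert_called_once_with(7, "You have 1 overdue invoice(s)")
```

In `unittest.mock` the same `Mock` class plays both roles. What makes it a stub or a mock in a given test is whether you assert on the calls it received.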

Use Test Driven Development or TDD

Write a test that fails initially and then succeeds once you implement the unit correctly. This way you don't need to add tests after the fact, and diagnosing failures is easier because each one points at the code you just wrote.
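As a small illustration of that rhythm, assume we want a hypothetical `slugify` helper that turns a title into a URL-friendly string. The test is written first, and the implementation follows.

```python
import re


# Step 1 (red): write the test first. Run at this point, it fails,
# because slugify() does not exist yet.
def test_slugify():
    assert slugify("Hello World") == "hello-world"
    assert slugify("  On   Testing! ") == "on-testing"


# Step 2 (green): write just enough code to make the test pass.
def slugify(title: str) -> str:
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words)
```

From here you would refactor with the test as a safety net, then add the next failing test for the next piece of behavior.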

What Should you Test?

A good rule of thumb: One test class per production class.

- A unit test class should at least test the public interface of the class. The interface is a contract that the class must fulfil. If your tests are written against that contract, anything else that relies on the interface can trust it to behave as promised.

Private methods do not need to be tested, because they are assumed to be "implementation methods", essentially the internals that make the public methods work.

All non-trivial code paths should be tested, both happy path, and edge cases.

Look at the code under test as an outside observer. It's like inserting a quarter into a vending machine: will the item you requested come out? If you request an invalid item, what will happen? You aren't interested in the logic for how the item is delivered or how things function, only in the result.

This adds flexibility: if a refactoring (same functionality, different implementation) is needed, the unit tests won't need to be rewritten.

Don't test trivial code: you won't gain anything from testing simple getters, setters, or other trivial implementations.
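The vending machine analogy can be sketched in code. This `VendingMachine` class is invented for the example. The tests only go through the public `buy` method, and the private helper is exercised indirectly.

```python
class VendingMachine:
    """Hypothetical class used to illustrate testing via the public interface."""

    def __init__(self, stock, price_cents=25):
        self._stock = dict(stock)  # private: internal bookkeeping
        self._price_cents = price_cents

    def buy(self, item, inserted_cents):
        """Public interface: returns the item, or raises on bad input."""
        self._check(item, inserted_cents)
        self._stock[item] -= 1
        return item

    def _check(self, item, inserted_cents):
        # Private helper: covered indirectly through buy().
        if self._stock.get(item, 0) <= 0:
            raise KeyError(f"{item} is not available")
        if inserted_cents < self._price_cents:
            raise ValueError("not enough money inserted")


def test_quarter_buys_item():
    machine = VendingMachine({"gum": 1})
    assert machine.buy("gum", 25) == "gum"


def test_invalid_item_is_rejected():
    machine = VendingMachine({"gum": 1})
    try:
        machine.buy("caviar", 25)
    except KeyError:
        pass  # expected: the machine refuses unknown items
    else:
        raise AssertionError("expected KeyError")
```

If `_check` were later inlined or replaced by a different data structure, both tests would keep passing unchanged, because they never mention it.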

Another way of looking at what to test:

Put an object into each "interesting" configuration, and test them. Configurations:

- Constructors (when creating the object, will it work as expected for each state?)

- Mutators (setters and other methods that change data; typically only test these for complicated, non one-to-one implementations)

- Iterators (test that they iterate as expected)

- Accessors/queries (methods that fetch private data stored in an object; test these)

We write two unit tests, a positive case and a negative case, for each of the above.

Arrange, Act, Assert:

Arrange

Set up the object to be tested and surround it with its collaborators, using mocks where needed.

Act

Act on the object through some mutator. Give it parameters.

Assert

Make claims about the object, its collaborators, and its parameters.
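Here is one way the three phases might look in practice. The `Cart` class and its `price_lookup` collaborator are hypothetical, and the collaborator is replaced with a `unittest.mock.Mock`.

```python
from unittest.mock import Mock


class Cart:
    """Hypothetical object under test; price_lookup is its collaborator."""

    def __init__(self, price_lookup):
        self._price_lookup = price_lookup
        self._items = []

    def add(self, sku, quantity=1):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self._items.append((sku, quantity))

    def total_cents(self):
        return sum(
            self._price_lookup.price_cents(sku) * qty for sku, qty in self._items
        )


def test_add_updates_total():
    # Arrange: build the object and surround it with a mocked collaborator.
    prices = Mock()
    prices.price_cents.return_value = 250
    cart = Cart(prices)

    # Act: change the object through a mutator.
    cart.add("apple", quantity=2)

    # Assert: make claims about the object and its collaborator.
    assert cart.total_cents() == 500
    prices.price_cents.assert_called_once_with("apple")


def test_add_rejects_zero_quantity():
    # Negative case: same three phases, expecting a failure.
    cart = Cart(Mock())
    try:
        cart.add("apple", quantity=0)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
```

Keeping the three phases visually separate makes it easy to see at a glance what each test sets up, what it does, and what it claims.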

Integration Tests:

They test the integration of your application with all the parts that live outside of it (databases, filesystems, network calls to other applications).

Write integration tests for all pieces of code where you either serialize or deserialize data, or get data from external sources.

Why? To build confidence that, given no issues with upstream dependencies, your code will work.

Integration Test for a database:

- Start a database

- Connect your application to the database

- Trigger a function within your code that writes data to the database

- Check that the expected data has been written to the database by reading the data from the database
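The four steps above can be sketched with Python's built-in `sqlite3` module. Using an in-memory SQLite database is an assumption made to keep the example self-contained, and the `users` table and `save_user` function are hypothetical.

```python
import sqlite3


def save_user(conn, name, email):
    """Code under test (hypothetical): writes one user row to the database."""
    conn.execute("INSERT INTO users (name, email) VALUES (?, ?)", (name, email))
    conn.commit()


def test_save_user_writes_row():
    # Start a database and connect to it (in-memory, so nothing is left behind).
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    try:
        # Trigger the function that writes to the database.
        save_user(conn, "Ada", "ada@example.com")

        # Read the data back and check that it is what we expect.
        rows = conn.execute("SELECT name, email FROM users").fetchall()
        assert rows == [("Ada", "ada@example.com")]
    finally:
        conn.close()
```

With a server-based database the shape stays the same. Only the "start a database" step changes, for example to a local or containerized test instance.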

Integration Test for an External API:

- Start your application

- Start an instance of the separate service (or a test double with the same interface)

- Trigger a function within your code that reads from the separate service's API

- Check that your application can parse the response correctly
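A minimal sketch of these steps using only the Python standard library. The weather endpoint, its JSON shape, and `fetch_temperature` are all invented for illustration. The fake server stands in for the separate service as a test double with the same HTTP interface.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeWeatherHandler(BaseHTTPRequestHandler):
    """Test double for a hypothetical weather service."""

    def do_GET(self):
        body = json.dumps({"city": "Berlin", "temp_c": 21}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def fetch_temperature(host, port, city):
    """Code under test (hypothetical): calls the API and parses the response."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", f"/weather/{city}")
        response = conn.getresponse()
        return json.loads(response.read())["temp_c"]
    finally:
        conn.close()


def test_fetch_temperature_parses_response():
    # Start the fake service on a free local port (port 0 lets the OS choose).
    server = HTTPServer(("127.0.0.1", 0), FakeWeatherHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        # Trigger the code that reads from the API and check the parsed value.
        assert fetch_temperature("127.0.0.1", server.server_port, "Berlin") == 21
    finally:
        server.shutdown()
        server.server_close()
```

Because the fake always returns the same payload, this test checks your parsing and HTTP handling, not whether the real service still behaves this way.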

Other Examples: Reading from and writing to queues, Writing to a filesystem.

Aim for Narrow integration tests:

- Spin up a local MySQL database, instead of the real thing.

- For other systems, like an API, build a fake version that mimics the behavior.

Avoid integrating with the real production system in your automated tests. Create a TEST instance. You don't want test logs in production.

Broad integration tests run over the network against real components, which makes them slower and usually harder to write.

If you mock external components, you can ensure the mocks stay correct via contract testing.

Contract Testing

With microservices come many more interacting components, and potentially a lot more integration tests.

These services need to communicate with each other via certain well-defined interfaces/contracts. (These can change.)

Providers serve data to consumers; consumers process data obtained from providers.

A provider could publish data to a queue, or expose an API for consumers to read from.

Automated contract tests serve as a good regression test to make sure deviations from the contracts are noticed early in your build pipeline.

If you have an API and you change its externals or internals, contract testing checks that the changes you implement won't affect downstream consumers.

CDC - Consumer-Driven Contract Tests:

- Consumers write tests for all the data that they need from the interface. The consuming team sends these tests to the provider team, who run them to ensure that all the data the consumer needs is available.

This allows the provider to implement only what's necessary. YAGNI.

- If the provider makes a breaking change, the CDC tests fail, preventing the change from going live.

For a microservice approach, CDC tests are a big step towards establishing autonomous teams.

Pact is probably the most prominent tool for these.
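To show the idea without any tooling, here is a hand-rolled sketch of a consumer-driven contract check. It is not Pact and does not use Pact's file format. The contract dictionary, `contract_violations`, and `get_order` are all hypothetical.

```python
# Consumer side: the fields (and types) this consumer relies on.
# Hypothetical contract for illustration; not Pact's format.
ORDER_CONTRACT = {"id": int, "status": str, "total_cents": int}


def contract_violations(response: dict, contract: dict) -> list:
    """Return human-readable contract violations (empty list if none)."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            problems.append(f"wrong type for {field}")
    return problems


# Provider side: a stand-in for the provider's real handler.
def get_order(order_id: int) -> dict:
    return {
        "id": order_id,
        "status": "shipped",
        "total_cents": 1999,
        "carrier": "DHL",  # extra field the consumer does not care about
    }


def test_provider_honours_consumer_contract():
    # Extra fields are fine; missing or retyped fields would break the consumer.
    assert contract_violations(get_order(42), ORDER_CONTRACT) == []
```

The provider runs this test in its own pipeline. If someone renames `status` to `state`, the check reports `missing field: status` before the change reaches the consumer.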

UI Tests:

- You can write unit tests with the backend stubbed out.

- If you need to step through the UI, you can use Selenium, which has bindings for most popular languages.

Selenium is good for E2E tests as well.

Here are some practical testing setups for you to use:

Testing Setups:

Nodejs Examples

- Mocha (test runner), Chai (an assertion library), Sinon (stubbing/mocking library), and Istanbul for code coverage. Add-ons include chai-as-promised (assertions for promises) and chai-http (for making HTTP calls). This setup allows more logical operators, such as && and ||, and provides a lot more flexibility.

- Jest provides assertion, stubbing, and mocking out of the box.

- Input, expected output, assert.

Java example:

- JUnit (test runner), Mockito (mocking), WireMock (stubbing external services), Pact (writing CDC/contract tests), Selenium (UI-driven E2E tests), REST Assured (REST API E2E tests).

Outro

The common age for developers used to be above 30 years old. Discipline and responsibility came with age. With the number of new developers each year, they need to find their way. Testing is a way to give structure, and build confidence in your code.

This has been a letter from a coder.

Best Regards, Tyler Farkas
